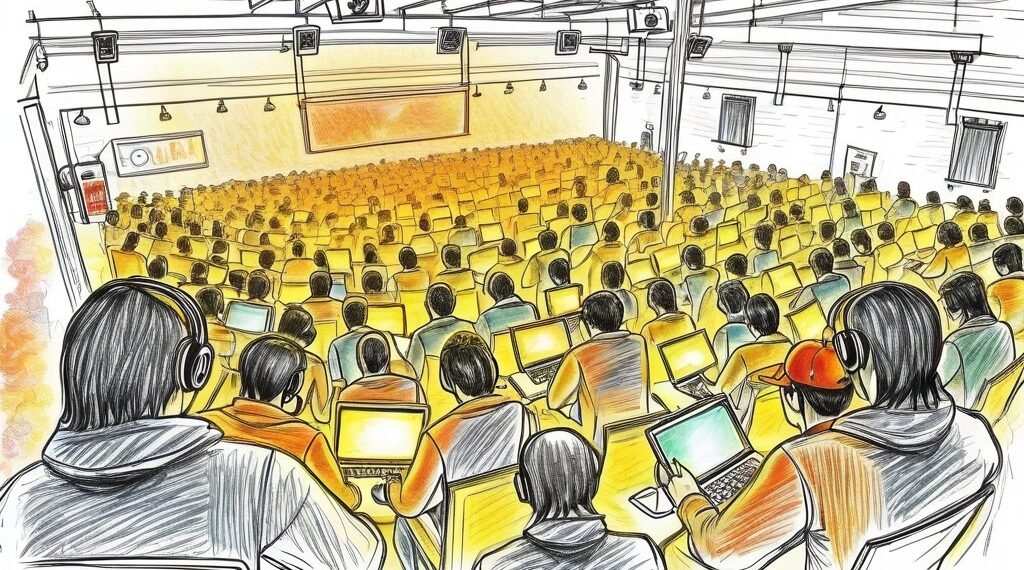

Last month, more than 600 hackers competed in a hacking arena, trying to trick popular AI models into producing illicit content. The event was organized by Gray Swan AI, a security startup developing tools to ensure that intelligent systems are deployed safely. A group of computer scientists founded Gray Swan last September, and the company has already secured notable partnerships and contracts within the industry. Its goal is to provide practical solutions to problems in AI safety and security.

Gray Swan’s founders have also been researching safety concerns that are unique to AI. Their work indicates that AI models can be defended against security breaches, and they have built safety measures to counter these threats. The team has developed a proprietary model called “Cygnet,” which uses “circuit breakers” to strengthen its defenses against attacks. This method has proven effective at preventing AI models from taking illicit actions. Gray Swan recently raised $5.5 million in seed funding and plans to raise more capital in a Series A round. The company is also building a community of hackers to help address growing threats to AI security.

The ainewsarticles.com article you just read is a brief synopsis of the original article.